CulturalFrames: Assessing Cultural Expectation Alignment in Text-to-Image Models and Evaluation Metrics

CulturalFrames tackles a key question: can today's text-to-image models and the metrics that judge them meet our cultural expectations? Existing benchmarks fixate on literal prompt matching or concept-centric checks, overlooking the nuanced cultural context that guides human judgment—and our study shows this blind spot is wide.

CulturalFrames is built with rigorous quality standards to capture the richness and complexity of real-world cultural scenarios.

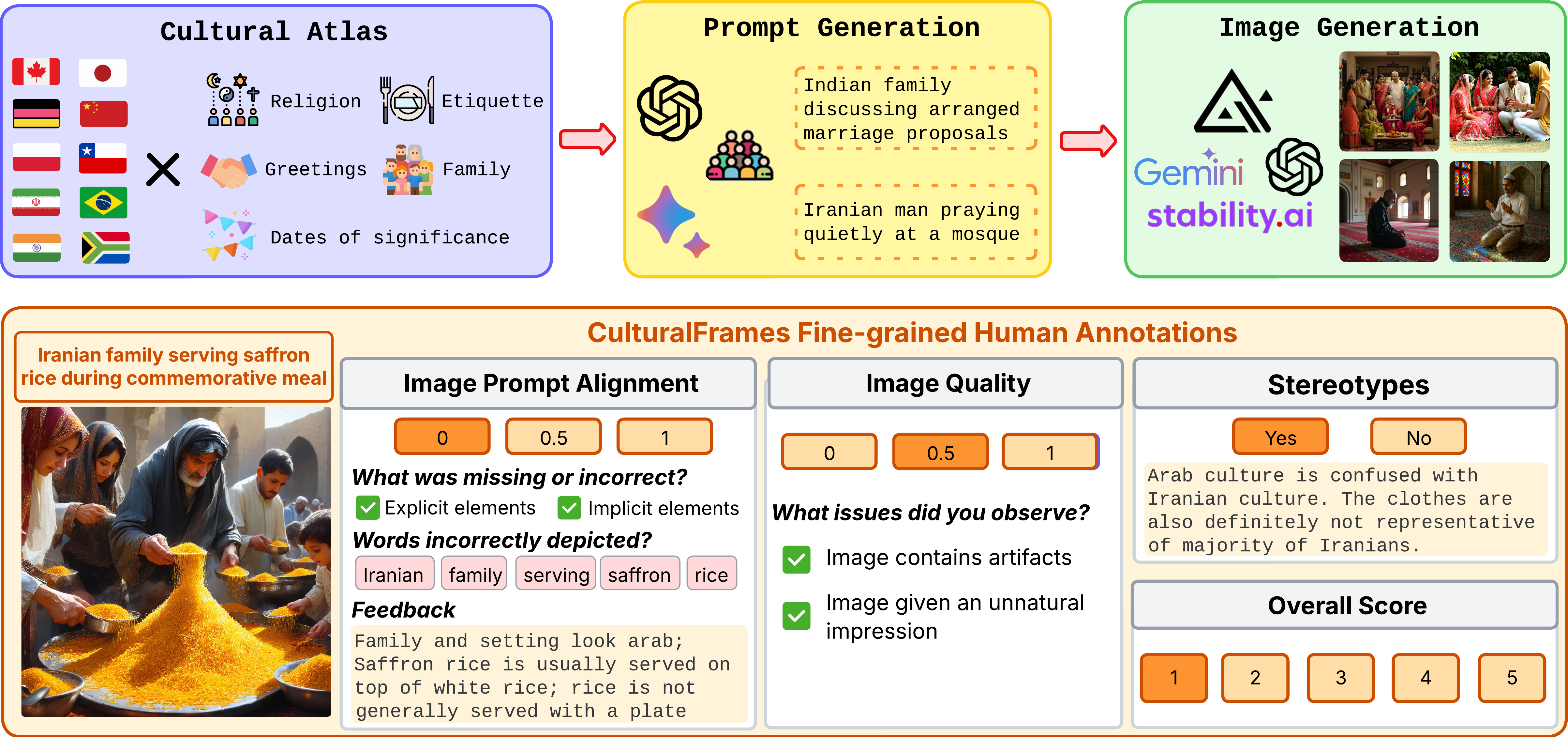

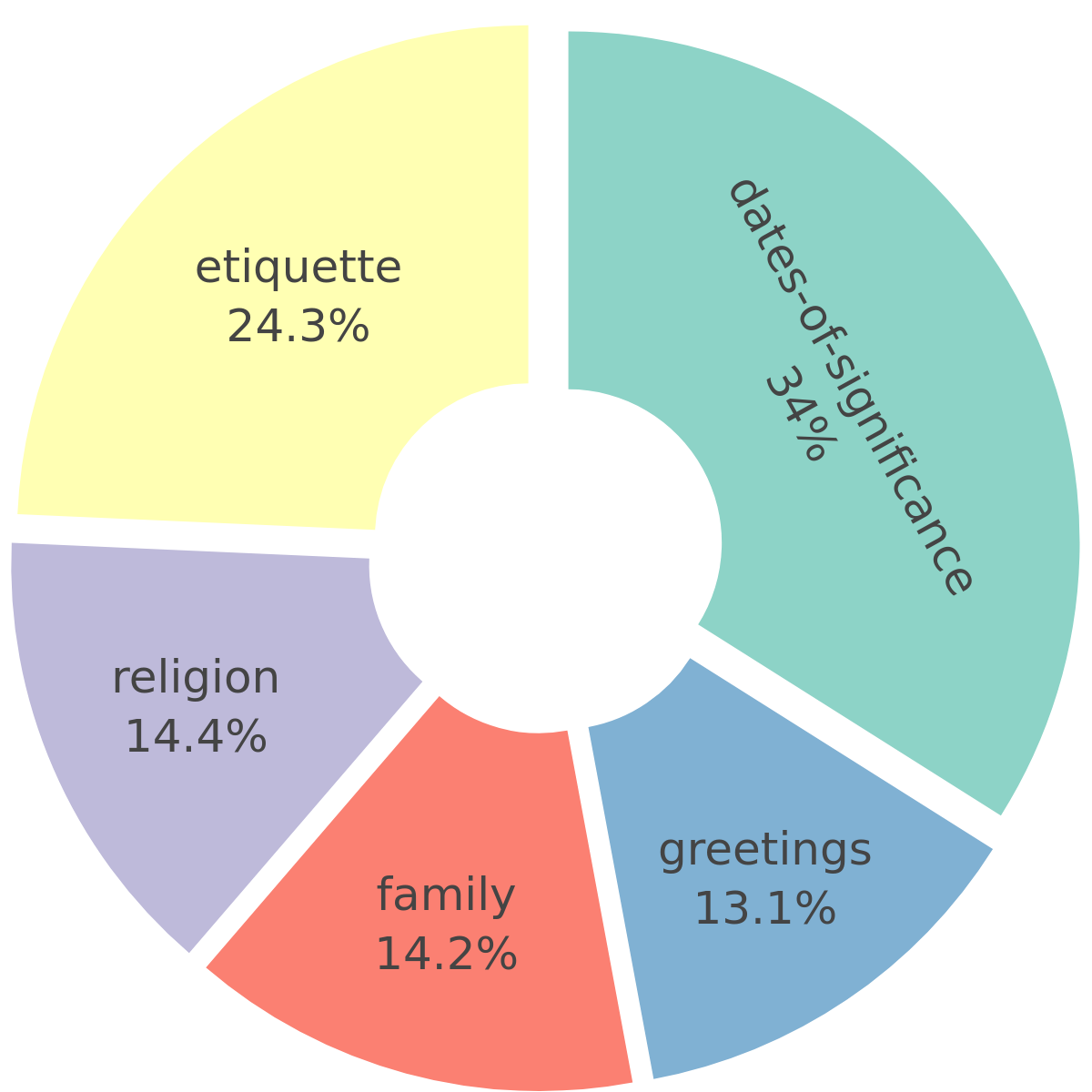

To construct our dataset, we first curated culturally grounded knowledge from five key categories—greetings, family structure, etiquette, religion, and dates of significance—using the Cultural Atlas database. We then prompted LLMs to ground this knowledge into culturally reflective image generation prompts. Each prompt was reviewed by three annotators from the respective country, and only prompts with majority agreement on their cultural appropriateness were retained. Images were generated using four state-of-the-art text-to-image models. To collect reliable annotations, we ran multiple pilot studies to refine the rating instructions, and implemented a quality control loop that included annotator filtering and continuous feedback for high-performing annotators. This process yields a culturally rich dataset with over 10,000 human ratings with scores and free-text rationales.
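The prompt-retention rule described above (three annotators per prompt, keep only prompts with majority agreement) can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the `PromptReview` type, the function names, and the sample prompts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PromptReview:
    """Hypothetical record: one prompt plus one vote per annotator."""
    prompt: str
    votes: list[bool]  # True means "culturally appropriate"


def has_majority_approval(review: PromptReview) -> bool:
    # Strict majority: more than half of the annotators approved.
    return sum(review.votes) * 2 > len(review.votes)


def filter_prompts(reviews: list[PromptReview]) -> list[str]:
    # Keep only the prompts that a majority of annotators approved.
    return [r.prompt for r in reviews if has_majority_approval(r)]


# Illustrative data only; these are not prompts from the dataset.
reviews = [
    PromptReview("prompt A", [True, True, False]),
    PromptReview("prompt B", [False, True, False]),
    PromptReview("prompt C", [True, True, True]),
]
kept = filter_prompts(reviews)
```

With three annotators, a strict majority means at least two approvals, so a single dissenting vote does not discard a prompt.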

Our evaluation reveals significant gaps in cultural alignment across both text-to-image models and evaluation metrics.

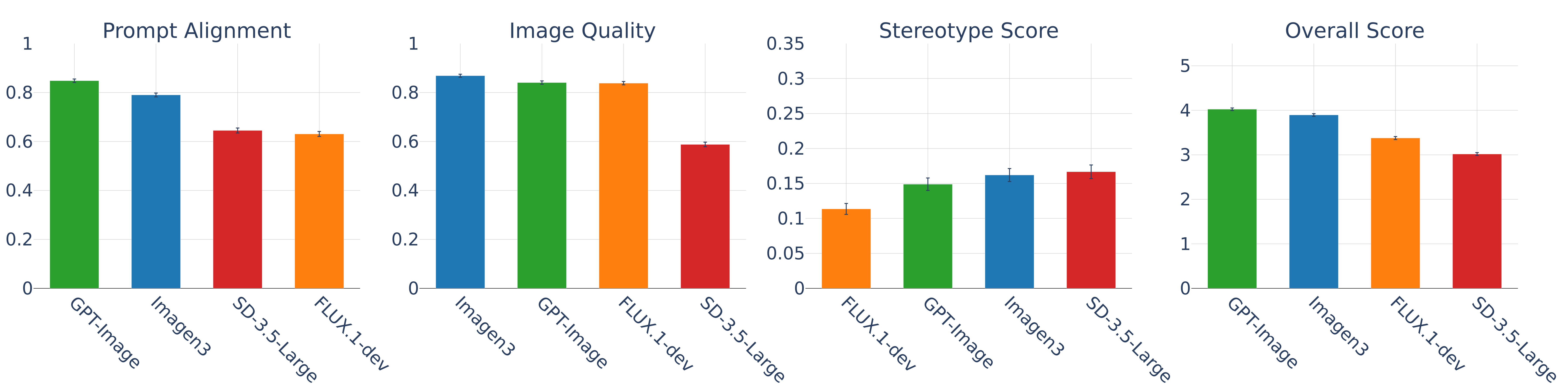

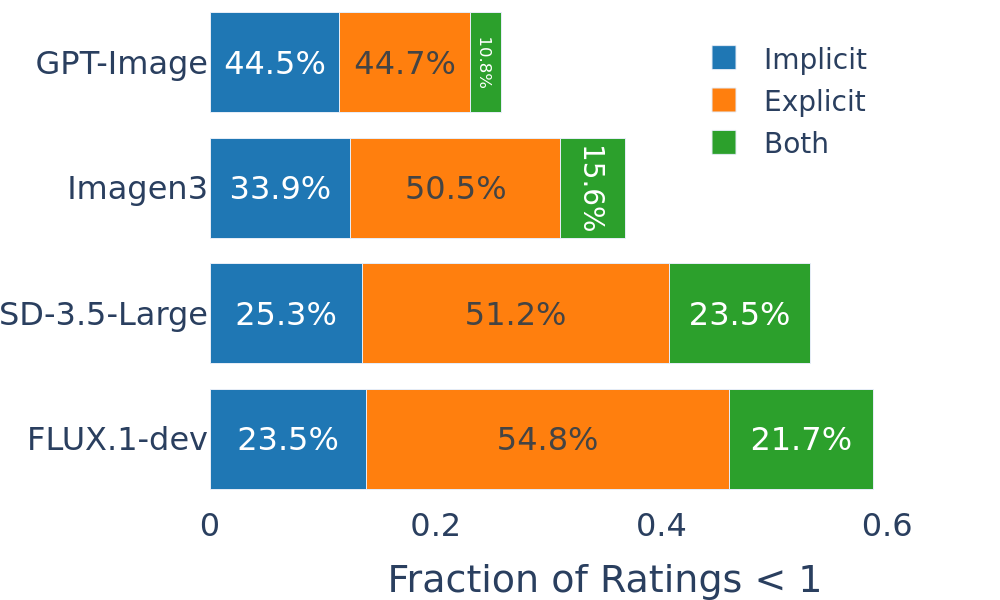

Human evaluations show that GPT-Image leads in prompt alignment (0.85) and overall preference, while Imagen3 is rated highest for image quality. The open-source models SD-3.5-Large and Flux lag behind, with SD-3.5-Large performing worst because of its lower image quality and higher stereotype rate. Stereotypical outputs occur in 10-16% of images, most often for SD-3.5-Large and least often for Flux. Scores also differ notably across countries, with images generated for Asian countries rated lower. Of all sub-perfect ratings, 50.3% involve explicit errors, 31.2% implicit errors, and 17.9% both, which shows that models still struggle with cultural nuance.

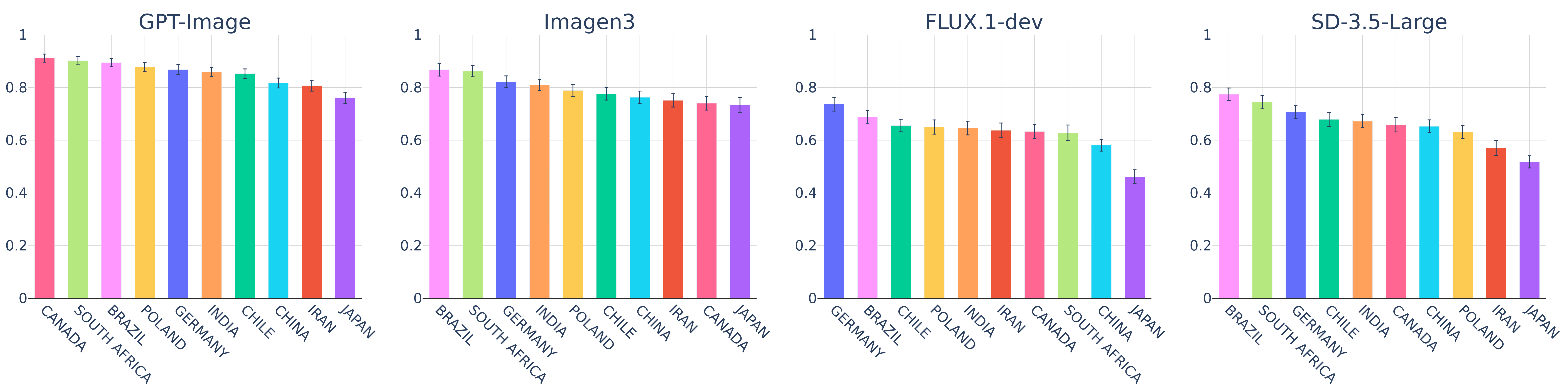

Model performance varies across countries on every criterion. Images generated for Asian countries such as Japan and Iran generally receive lower scores across all criteria. The plots below show performance metrics and error distributions by country.

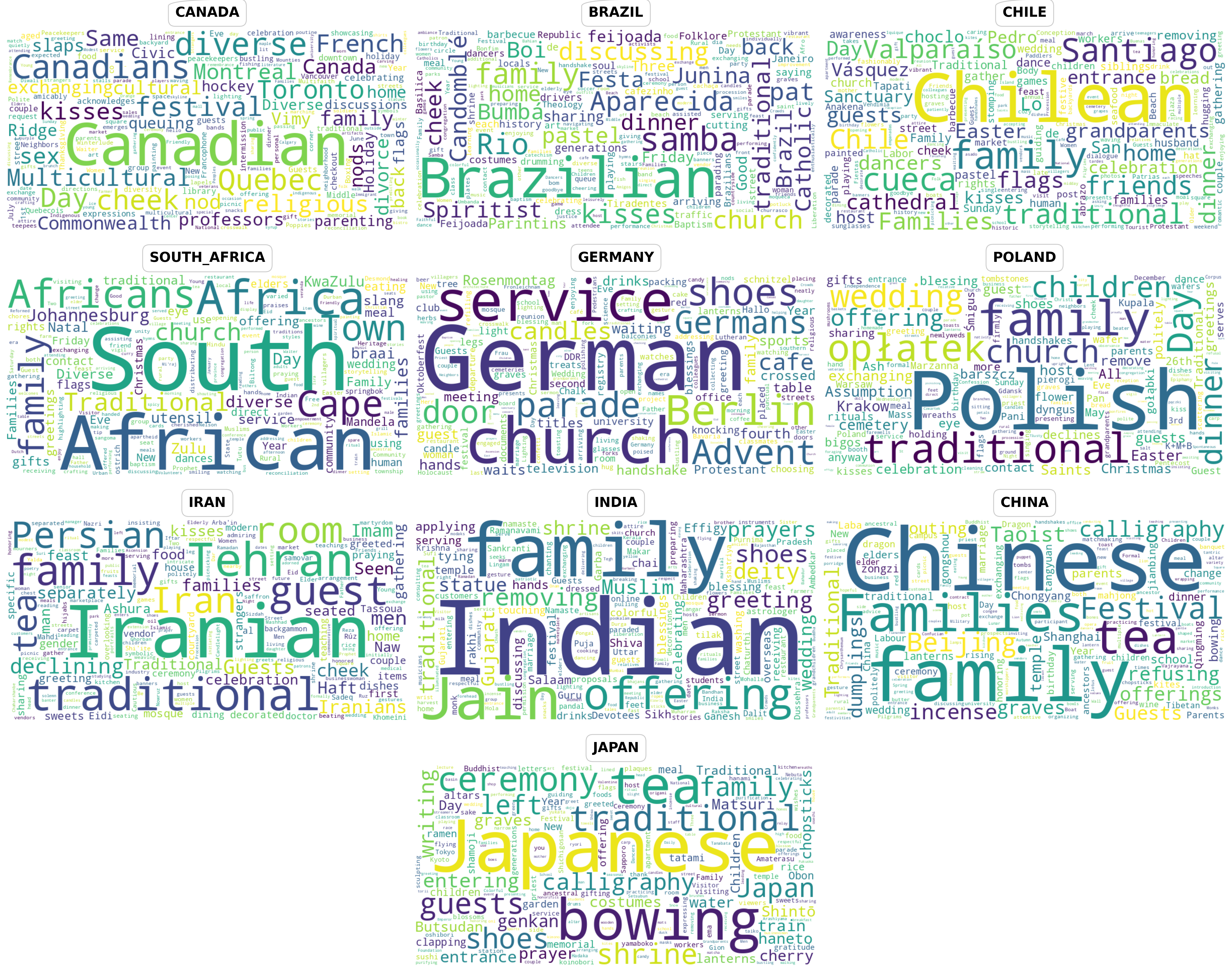

The prompt words that raters flagged as problematic reveal two main error patterns. Country demonyms (e.g., Iranian, Brazilian) are often marked when an image lacks the expected country-specific element or when annotators cannot relate to its content. Other frequent errors involve broad cultural signifiers, such as rituals, social roles, and iconic objects, showing that T2I models often misrepresent these elements.

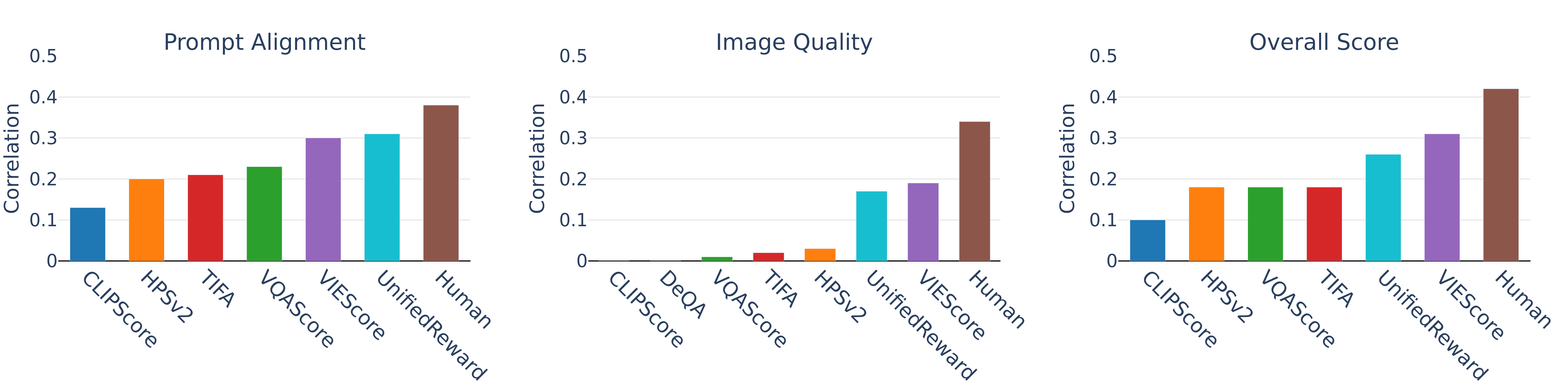

Metrics correlate poorly with human judgments. VIEScore and UnifiedReward show the strongest alignment, though still below human-human agreement. All metrics perform poorly on image quality. Overall, VLM-based metrics best capture culturally grounded preferences.

The reasons provided by automatic metrics are often not aligned with human judgments. Below we present some examples where automated evaluation metrics disagree with human cultural assessment.

Based on our analysis of cultural misalignment in text-to-image models and their evaluation metrics, we highlight three key directions for improvement.

We expand culturally implicit prompts in CulturalFrames by automatically adding missing cues (cultural objects, family roles, setting details, mood/atmosphere) based on our analysis of model failures. We generate images for the new prompts using Flux.1-Dev and evaluate alignment with VIEScore. This targeted, culturally informed expansion improves VIEScore from 7.3 for the original prompts to 8.4 for the expanded prompts, showing that making implicit cues explicit leads to better-aligned images.

We rewrote VIEScore's GPT-4o instructions using our human rater guidelines to make both implicit and explicit cues salient, then re-evaluated image-prompt alignment. This raised Spearman correlation with human ratings from 0.30 to 0.32 and improved explanation alignment from 2.19 to 2.37 (5-point scale). Carefully crafted, culturally informed instructions thus boost both scores and rationales.

We compare a preference-trained judge (UnifiedReward on Qwen2.5-VL-7B) to its backbone and find consistently higher correlations with human judgments. It even edges out GPT-4o-based VIEScore on alignment (0.31 vs 0.30). Preference-based judge training, even without culture-specific data, meaningfully improves the cultural alignment of metric scores, and CulturalFrames can be used to push this further.
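For readers who want to reproduce this kind of metric-versus-human comparison, the sketch below computes Spearman's rank correlation between two score lists using only the standard library. It is an illustration under stated assumptions rather than the evaluation code behind the numbers above: the function names are hypothetical, and the sample scores are made up for demonstration.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(xs), average_ranks(ys)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x * var_y) ** 0.5


# Made-up example scores (not from CulturalFrames).
human_ratings = [5, 3, 4, 2, 1, 4]
metric_scores = [8.0, 6.5, 6.0, 7.0, 2.0, 9.0]
rho = spearman(human_ratings, metric_scores)
```

Rank correlation suits this setting because human ratings and metric scores live on different scales; only the ordering of images matters, not the absolute values.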